Pasta-Gate: The Dangers and Limitations of Artificial Intelligence in Journalism

AI-based technologies are very promising, but there are certain areas where their application can be problematic.

The ongoing development of artificial intelligence (AI) has undoubtedly revolutionized numerous industries, including journalism. However, there are certain areas where applying AI-based technologies can be problematic. Recently, the publisher Burda released a special issue containing 99 pasta recipes that were largely generated by AI. This example illustrates some of the dangers and limitations that can arise when AI is used in journalism.

Lack of Contextualization

AI-generated texts can have considerable flaws due to a lack of contextualization. AI can gather and analyze information, but it does not understand the social or cultural context in which that information is used. In the case of a special recipe issue, this can result in recipes that are technically correct but make little sense in their composition and presentation. The AI cannot grasp the subtle nuances of cooking that a human cook understands intuitively.

Lack of Journalistic Ethics

Another problem with the use of AI in journalism is its inability to take journalistic ethics into account. Journalists have a responsibility to provide accurate, balanced and ethical reporting. AI can analyze data and recognize patterns, but it cannot make moral or ethical decisions. The danger is that AI-based journalism tools may produce unreliable or biased information as a result of unintentional errors in the underlying data.

Lack of Human Intuition

Human intuition is invaluable in journalism. Experienced journalists can understand complex issues, establish context, recognize subtle signals, and draw well-founded conclusions. AI, on the other hand, is based on algorithms and predefined rules that are limited in their flexibility. It may not be able to adequately capture the complex nuances of human stories or the ramifications of events. Without human intuition, AI systems may produce flawed analyses or overlook important information.

Reinforcement of Prejudices

AI systems learn from the data they are trained on. If this data contains prejudice or discrimination, there is a danger that the AI will reinforce these prejudices or even unknowingly produce racist, sexist, or otherwise problematic content. In journalism, this can lead to inaccurate or biased reporting that discriminates against or stigmatizes certain groups of people.

Conclusion

AI undoubtedly has the potential to enrich journalism and optimize processes. However, it is important to recognize the dangers and limitations of this technology. Lack of contextualization, the inability to apply journalistic ethics, the absence of human intuition and the reinforcement of prejudices are just some of the challenges that need to be overcome. Integrating AI into journalism therefore requires careful consideration to ensure that the technology serves as a tool that supports human journalists rather than replacing them. Human judgment, empathy and ethical responsibility remain essential to high-quality reporting.

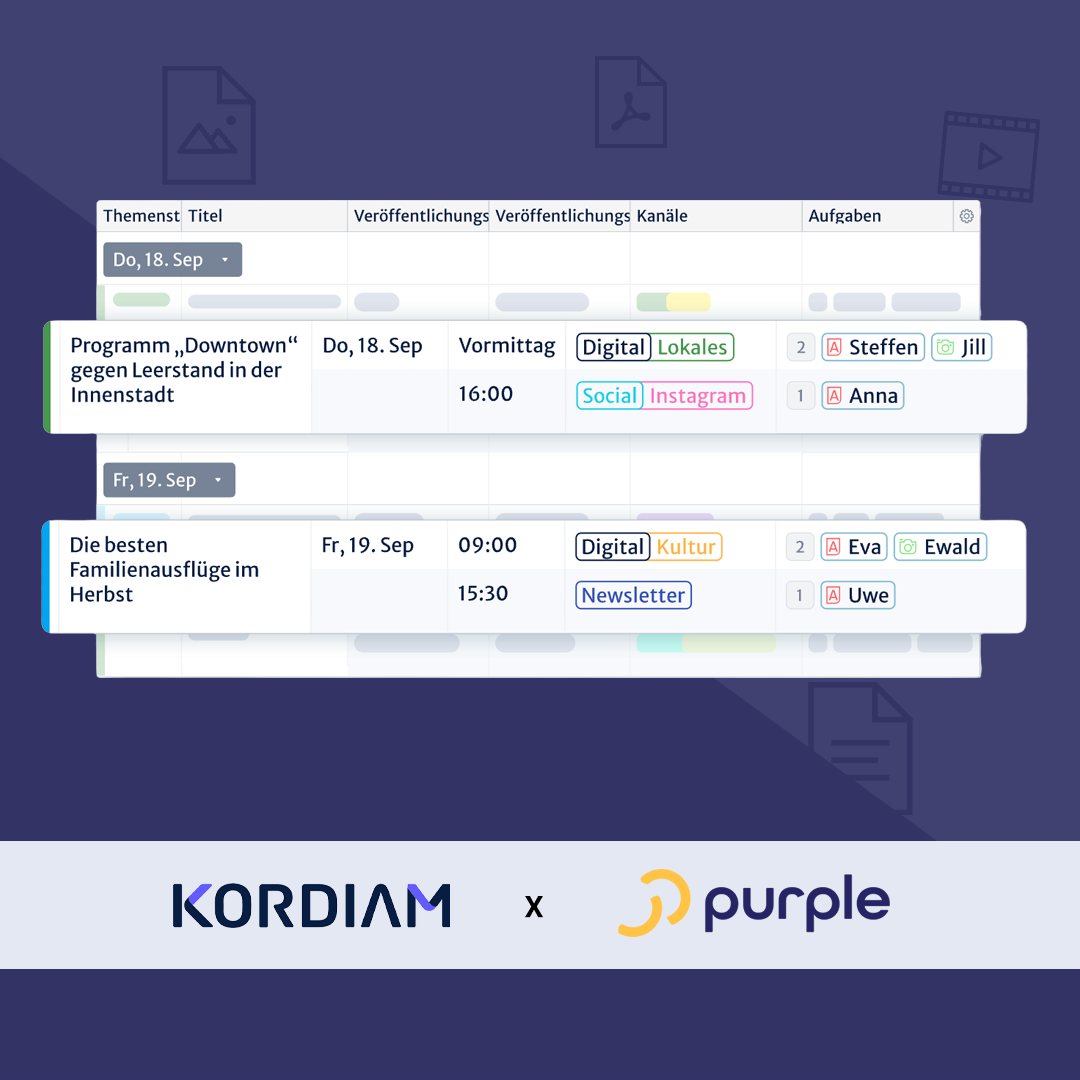

If you are interested in exploring and using the possibilities of artificial intelligence in journalism, get in touch with us. Purple is a leading company that develops innovative AI features to make workflows more efficient and support journalists in their work.

Let's explore the possibilities of AI in journalism together.

The future of journalism lies in intelligent collaboration between people and technology. Purple can help you shape this future. Sign up today and discover the exciting possibilities of artificial intelligence in journalism!
